LLM Model Comparison for RAG Chatbots: Find Domain-Specific Options by Industry

Comparing large language models (LLMs) is critical when building a retrieval-augmented generation (RAG) chatbot that supports your business goals, especially if your use case requires domain expertise, regulatory compliance, or consistently accurate answers.

With dozens of new LLMs released over the last 18 months, businesses face more choices, and more confusion, when selecting the best fit for a chatbot. Our AI chatbot development company has built custom AI chatbots using major models such as GPT-4o, Claude 3, and Gemini 1.5.

Whether you’re in healthcare, finance, education, or another sector, we break down which models can power an AI chatbot for your business. This blog offers a detailed LLM model comparison to help businesses like yours select the best domain-specific option.

Without further ado, let’s get practical insights into LLMs for chatbots and beyond.

Why LLM Model Comparison Matters for AI Chatbot Development

Many companies are seeing strong results from large language models. However, others are facing setbacks due to rising compute costs, data issues, and poor model selection.

The wrong LLM selection can result in:

- Inaccurate or hallucinated responses

- Poor grounding in retrieved content

- Excessive costs or latency at scale

- Integration friction with vector databases or toolchains

That’s why LLM model comparison is non-negotiable, especially for highly regulated, high-accuracy sectors. Don’t get carried away by the hype. Find the model that works for you.

LLM Model Comparison: Finding the Right Domain-Specific LLM for Your Industry

Our hands-on comparison of five leading LLMs helps you select the right model for your specific business goals. Whether you’re building chatbots for education, finance, healthcare, or any other sector, this guide highlights which models align best with your industry use case.

GPT-4 / GPT-4o (OpenAI)

GPT-4o is OpenAI’s most production-optimized model, known for reliable grounding, strong function-calling, and robust ecosystem support. It’s best-suited for chatbots that require precise logic, multilingual handling, or quick deployment via API.

Businesses building SaaS copilots or legal assistants benefit from its ability to handle complex instructions with low hallucination. That said, the premium API pricing makes it more suitable for mature products or enterprise MVPs with defined ROI.

| Pros | Cons |
|---|---|
| Strong language understanding | Closed source |
| Low hallucination rates | Cost can shoot up at scale |
| Function calling support | |

Ideal for: SaaS, B2B customer support, legal tech, and education

For the latest advancements in the OpenAI family, including deeper reasoning, improved tool integration, and agentic capabilities, see this full review of ChatGPT 5 (which builds on GPT-4o foundations).

Claude 3 (Anthropic)

Claude 3 is known for its long-document reasoning, safety, and highly structured output. This makes it popular in industries that prioritize compliance — like legal research, patient education, or financial summarization.

Its balanced tone and strong grounding fidelity make it less prone to hallucination. While it is available only via API (no open weights), Anthropic’s conservative, safety-focused approach suits regulated environments.

| Pros | Cons |
|---|---|
| Extremely long context | No open weights |
| Ethical bias minimization | Smaller dev ecosystem than OpenAI |
| High grounding precision | |

Ideal for: Legal, finance, healthcare, and HR knowledge bases

Gemini 1.5 (Google)

Gemini 1.5 stands out for its massive context window (1M tokens, extended to 2M for Gemini 1.5 Pro), which suits chatbots that process lengthy PDFs, manuals, or datasets. It also accepts multimodal input, including images, audio, and video alongside text.

Its integration with Google Cloud’s Vertex AI makes it an excellent option for enterprises already in Google’s ecosystem. However, it may feel less accessible to startups outside that infrastructure due to enterprise-centric tooling and access limitations.

| Pros | Cons |
|---|---|
| Unmatched context length | Not fully open to all developers |
| Tight integration with Google Workspace | Fewer community integrations |
| Strong for complex retrieval tasks | |

Ideal for: enterprise R&D, analytics, healthcare, and technical documentation

LLaMA 3 (Meta)

As an open-weight model (released under Meta’s Llama 3 Community License rather than a standard open-source license), LLaMA 3 offers a high-performance alternative to proprietary APIs. It’s ideal for companies that need data control, offline deployment, or model customization, such as government, finance, or enterprise IT.

It works well with open RAG frameworks like LangChain and LlamaIndex, and with serving tools such as vLLM and Ollama. While it requires infrastructure and engineering setup, LLaMA 3 enables long-term cost efficiency and deep integration flexibility.

| Pros | Cons |
|---|---|
| Customizable | Requires infra/DevOps |
| No API lock-in | Prompt alignment less reliable than proprietary models |
| Works well with LangChain, LlamaIndex, vLLM | |

Ideal for: Regulated industries, internal tools, and AI product startups

Mistral 7B / Mixtral (MoE)

Mistral models are fast, lightweight, and open-source — making them great for startups or developer tools. Mixtral, with its Mixture-of-Experts architecture, provides a promising performance-to-cost ratio.

While these models are less capable at complex reasoning, they excel in speed, deployability, and integration with LangChain or Haystack. They are effective for lightweight chatbots, MVPs, or use cases where rapid iteration matters more than deep semantic fidelity.

| Pros | Cons |
|---|---|
| Lightweight, easy to deploy | Not as strong in multi-step reasoning |
| Good LangChain + Haystack support | Requires careful prompt engineering for RAG consistency |
| Rapidly growing community | |

Ideal for: Budget-conscious builds, lightweight bots, and dev tools

Need help integrating GPT-4, Claude, or open-source LLMs into your product? You can hire AI developers to create scalable RAG chatbot solutions aligned with your domain and tech stack.

LLM Model Comparison Table: Select the Model That Works for You

Choosing the right model depends heavily on the domain you’re operating in. Here are recommended LLMs by industry for your guidance:

| Model | Ideal For | Deployment Type | Cost Level | Why It Fits |
|---|---|---|---|---|
| GPT-4 / GPT-4o | SaaS, Legal, Education, Customer Support | API (OpenAI) | High | Great reasoning, deep LangChain support |
| Claude 3 | Legal, Finance, Healthcare, HR | API (Anthropic) | High | Long-context grounding, ethical safety, precise outputs |
| Gemini 1.5 | Enterprise R&D, Analytics, Tech Documentation | Cloud API (Google Cloud) | Mid–High | Longest token window, multimodal input, strong enterprise support |
| LLaMA 3 | Internal tools, Regulated Sectors, Startups | Self-hosted / OSS | Low | Open source, customizable, good ecosystem with LangChain & LlamaIndex |
| Mixtral / Mistral | MVPs, Dev Tools, Lightweight Bots | Self-hosted / Cloud Edge | Low | Cost-effective, fast, growing community support |

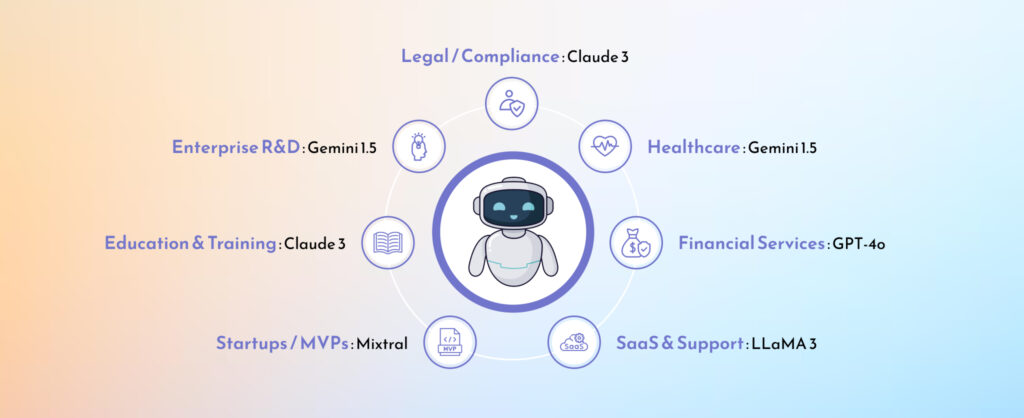

LLM Model Comparison: Real-World Use Cases by Industry

Let’s break down how different industries are using RAG chatbots — and why certain LLMs outperform others depending on the task, data type, and business goal.

Legal / Compliance

Law firms and regtech platforms use Claude 3 to summarize case documents, generate contract suggestions, or power internal legal assistants — all while maintaining structured outputs.

Healthcare

Gemini 1.5 is ideal for RAG bots assisting in patient education, clinical documentation, or medical research queries — thanks to its huge context window and accurate understanding of medical terminology.

Financial Services

GPT-4o powers financial assistants that surface reports, answer investor queries, or summarize compliance documents, with strong retrieval handling; citing the retrieved sources alongside each answer keeps responses auditable.

SaaS & Support

LLaMA 3 and GPT-4o enable customer-facing bots that can resolve product queries, generate support ticket summaries, and pull knowledge from dynamic help docs.

Enterprise R&D

Gemini’s 1M-token context window makes it ideal for bots analyzing research, technical reports, or internal wikis — all while keeping data private if deployed via GCP.

Education & Training

Claude 3 and Mixtral are used in personalized tutoring bots and LMS integrations where factual correctness and structured teaching logic matter.

Startups / MVPs

Startups prefer Mixtral or LLaMA 3 for building fast prototypes or lightweight assistants without paying for high-volume API usage.

Beyond model choice, frameworks like LangChain v1 provide durable workflows, middleware, and observability — ensuring that RAG chatbots are not only accurate but also production‑ready across industries.

Not seeing your industry? No problem. Our AI development company has partnered with clients across industries, including HR, logistics, manufacturing, and other specialized sectors.

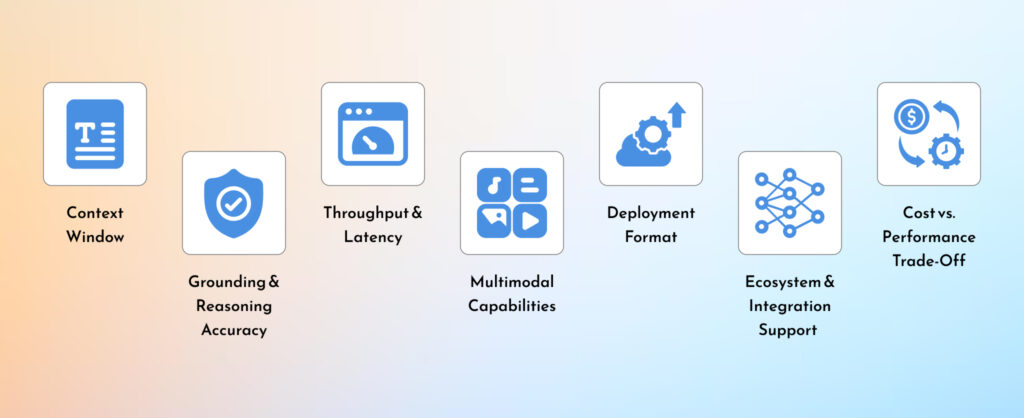

Key Factors That Influence LLM Selection for RAG Chatbots

When building a RAG chatbot, choosing the right model determines whether your solution will be accurate, scalable, and secure — or just another chatbot on the block.

Here are the 7 essential factors you should evaluate before making the decision.

1. Context Window (Token Limit)

The context window determines how much information the model can “see” at once. A larger window enables better understanding of long documents, multi-part queries, or overlapping context in customer interactions.

- Claude 3 Opus: 200K tokens (inputs over 1M tokens offered only to select customers)

- Gemini 1.5 Flash: Up to 1M tokens

- GPT-4o: 128K tokens

- LLaMA 3: 8K tokens (128K in Llama 3.1); Mixtral: 32K tokens
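To see why the window size matters in practice, here is a minimal sketch that packs retrieved chunks into a fixed token budget before they are sent to the model. It assumes a rough heuristic of about four characters per token for English text (a real tokenizer gives exact counts), and the helper names `estimate_tokens` and `pack_chunks` are hypothetical.

```python
from math import ceil


def estimate_tokens(text: str) -> int:
    """Rough token estimate (assumption: ~4 characters per token for English)."""
    return ceil(len(text) / 4)


def pack_chunks(chunks: list[str], window: int, reserved: int) -> list[str]:
    """Greedily keep retrieved chunks (assumed sorted by relevance) within budget.

    window   -- the model's context window in tokens (e.g. 128_000 for GPT-4o)
    reserved -- tokens held back for the system prompt, question, and answer
    """
    budget = window - reserved
    packed: list[str] = []
    used = 0
    for chunk in chunks:
        cost = estimate_tokens(chunk)
        if used + cost > budget:
            break  # stop at the first chunk that would overflow the window
        packed.append(chunk)
        used += cost
    return packed


# Three ~2,500-token chunks: a small window drops the least relevant one
chunks = ["a" * 10_000, "b" * 10_000, "c" * 10_000]
print(len(pack_chunks(chunks, window=8_000, reserved=2_000)))
print(len(pack_chunks(chunks, window=128_000, reserved=2_000)))
```

Stopping at the first overflowing chunk (rather than skipping it) preserves relevance order, so a smaller window loses the least relevant context first.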

2. Grounding & Reasoning Accuracy

Does the model stay factual and relevant when answering from retrieved documents?

- RAG succeeds only when the LLM uses retrieved context effectively — not when it ignores it.

- Some models hallucinate even with good content retrieval.

- Claude 3 and GPT-4o are widely regarded as among the strongest models at grounding.

- Use this factor to rule out flashy models that don’t follow instructions properly.
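Prompt design is one grounding lever you control regardless of model. A minimal sketch, assuming a chat-style API that takes role/content messages: the system message numbers the retrieved passages, asks for citations, and gives the model an explicit fallback when the context lacks the answer. The wording and the helper name `build_grounded_messages` are illustrative, not a vendor-prescribed format.

```python
def build_grounded_messages(question: str, passages: list[str]) -> list[dict]:
    """Build chat messages that restrict the model to the retrieved passages.

    The role/content message shape follows the common chat-completions
    convention; the instruction wording is an illustrative assumption.
    """
    # Number each passage so the model can cite its sources, e.g. [1], [2]
    context = "\n\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    system = (
        "Answer using only the numbered context below and cite passages like [1]. "
        "If the context does not contain the answer, reply: "
        "\"I don't know based on the provided documents.\"\n\n"
        f"Context:\n{context}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


messages = build_grounded_messages(
    "What is the refund window?",
    ["Refunds are accepted within 30 days of purchase.", "Shipping takes 5 days."],
)
print(messages[0]["content"])
```

Running the same prompts against each candidate model and counting how often it uses the fallback versus guessing when retrieval misses is a simple way to compare grounding behavior.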

3. Throughput & Latency (Performance)

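Throughput measures how quickly the model generates output, usually in tokens per second. It shapes the real-time experience in production systems, especially for chatbots, copilots, and AI agents. The figures below are approximate and vary with provider, hardware, and load.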

- Gemini 1.5 Flash: ~190 tokens/sec

- o3-mini (OpenAI): ~188 tokens/sec

- Mixtral: ~220 tokens/sec

- GPT-4o: Fast, optimized for streaming
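Throughput translates directly into how long a user waits. A back-of-the-envelope sketch, assuming steady decoding speed after the first token: total time is roughly the time to first token plus output tokens divided by tokens per second. The function name is hypothetical, and the speeds plugged in are the approximate figures listed above.

```python
def estimate_response_seconds(output_tokens: int, tokens_per_sec: float,
                              time_to_first_token: float = 0.0) -> float:
    """Approximate wall-clock time for one generated answer.

    Assumes a constant decoding speed after the first token; real speeds
    vary with provider, hardware, prompt length, and load.
    """
    if tokens_per_sec <= 0:
        raise ValueError("tokens_per_sec must be positive")
    return time_to_first_token + output_tokens / tokens_per_sec


# A 400-token answer at the approximate speeds listed above
for name, tps in [("Gemini 1.5 Flash", 190), ("o3-mini", 188), ("Mixtral", 220)]:
    print(f"{name}: {estimate_response_seconds(400, tps):.2f} s")
```

Streaming does not change the total, but it lets users start reading after the first token, which is why time to first token is worth measuring separately.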

4. Multimodal Capabilities

If your use case involves more than text (images, voice, documents), multimodal support becomes essential. GPT-4o leads in this space with robust cross-modal understanding.

- GPT-4o: Text, image, audio, and video input; text, audio, and image output (per OpenAI’s launch announcement; API support varies by endpoint)

- Gemini 1.5: Multimodal input (output limited to text)

- Claude 3: Text and image input across all three models (Haiku, Sonnet, Opus); text output

At The Brihaspati Infotech, we help brands with a broad spectrum of AI solutions. From building custom AI solutions to enhancing your brand visibility, we have you covered.

5. Deployment Format (API vs Local Hosting)

Do you need a plug-and-play cloud API, or do you require complete control over infrastructure and data?

- API-only models (GPT-4o, Claude 3, Gemini 1.5): easy to integrate, but prompts and retrieved data are sent to the provider

- Open-source / locally deployable models (LLaMA 3, Mixtral, Mistral 7B): host on your own infrastructure; ideal for regulated or private deployments

Choose self-hosted for compliance-heavy industries (healthcare, legal, and enterprise R&D).
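One way to keep the API-versus-self-hosted decision reversible is to put the model behind configuration. A minimal sketch, assuming an OpenAI-compatible client: vLLM and Ollama both serve OpenAI-compatible endpoints, so switching between a hosted API and a self-hosted LLaMA 3 can come down to a base URL and a model name. The `ModelEndpoint` class and `pick_endpoint` helper are hypothetical, and the local URLs are the tools’ default ports.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelEndpoint:
    name: str
    base_url: str
    model: str
    self_hosted: bool


# Illustrative endpoints. vLLM and Ollama both expose OpenAI-compatible APIs,
# so one client library can talk to any of them (local URLs use default ports).
ENDPOINTS = [
    ModelEndpoint("OpenAI", "https://api.openai.com/v1", "gpt-4o", False),
    ModelEndpoint("vLLM (local)", "http://localhost:8000/v1",
                  "meta-llama/Meta-Llama-3-8B-Instruct", True),
    ModelEndpoint("Ollama (local)", "http://localhost:11434/v1", "llama3", True),
]


def pick_endpoint(data_must_stay_on_prem: bool) -> ModelEndpoint:
    """Return the first endpoint that matches the deployment requirement."""
    for ep in ENDPOINTS:
        if ep.self_hosted == data_must_stay_on_prem:
            return ep
    raise LookupError("no endpoint matches the requirement")


print(pick_endpoint(data_must_stay_on_prem=True))
```

Keeping the rest of the RAG pipeline unaware of which endpoint it talks to makes it cheap to start on a hosted API and move to self-hosting later, or the reverse.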

6. Ecosystem & Integration Support

Your model needs to plug into the rest of your RAG pipeline: LangChain, Haystack, Pinecone, Qdrant, and more. Ecosystem maturity is crucial for smooth dev workflows.

- Best Ecosystem Support: GPT-4o, Claude 3, LLaMA 3

- OSS tooling: Hugging Face Transformers, vLLM, Ollama, and GGUF model files (the format used by llama.cpp)

Look for compatibility with your vector DBs, orchestration tools, and RAG framework.

A standout feature for ecosystem integration, especially with LangChain, vector databases like Pinecone or Qdrant, and agentic RAG pipelines, is OpenAI’s native function calling. It lets models like GPT-4o return structured requests to call external tools, APIs, and custom functions, which your application then executes. This turns simple retrieval into action-oriented workflows (e.g., database updates, CRM actions, or real-time calculations).
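To make the function-calling flow concrete, here is a minimal sketch of the application side. It defines one tool in the JSON Schema-based format that OpenAI’s Chat Completions API accepts through its `tools` parameter, then dispatches a call shaped like the name-plus-JSON-arguments pair the model returns. The tool `get_order_status` and its fake data are hypothetical, and the actual API request is omitted so the example runs offline.

```python
import json

# Tool definition in the format accepted by the Chat Completions `tools`
# parameter. `get_order_status` is a hypothetical tool for illustration.
ORDER_STATUS_TOOL = {
    "type": "function",
    "function": {
        "name": "get_order_status",
        "description": "Look up the shipping status of a customer order.",
        "parameters": {
            "type": "object",
            "properties": {
                "order_id": {"type": "string", "description": "The order identifier"},
            },
            "required": ["order_id"],
        },
    },
}


def get_order_status(order_id: str) -> dict:
    """Hypothetical backend lookup; replace with a real database or CRM call."""
    fake_db = {"A-1001": "shipped", "A-1002": "processing"}
    return {"order_id": order_id, "status": fake_db.get(order_id, "not found")}


# Map tool names the model may request to local Python functions
TOOL_REGISTRY = {"get_order_status": get_order_status}


def run_tool_call(name: str, arguments_json: str) -> str:
    """Execute a tool call proposed by the model and serialize the result.

    The model only proposes the call (a name plus JSON-encoded arguments);
    your code decides whether to run it and sends the result back.
    """
    if name not in TOOL_REGISTRY:
        return json.dumps({"error": f"unknown tool: {name}"})
    args = json.loads(arguments_json)
    return json.dumps(TOOL_REGISTRY[name](**args))


# Simulated tool call, shaped like the name/arguments pair the API returns
print(run_tool_call("get_order_status", '{"order_id": "A-1001"}'))
```

The registry acts as an allowlist: the model can only trigger functions you have explicitly exposed, which matters once tools start writing to databases or CRMs.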

For a hands-on developer guide with code examples and best practices, check out our practical walkthrough: LLM Function Calling with OpenAI – A Practical Guide for AI Developers.

7. Cost vs. Performance Trade-Off

Even if a model performs well, its cost per usage can significantly impact scalability, especially in production environments with high traffic.

Here’s how cost factors into the choice of LLM:

| Model | Deployment Type | Token Window | Approx. Cost Level | Ideal For |
|---|---|---|---|---|
| GPT-4o | API (OpenAI) | 128K | High | Enterprise, SaaS, legal |
| Claude 3 | API (Anthropic) | 200K | High | Compliance, finance, healthcare |
| Gemini 1.5 | API (Google Cloud) | 1M (2M on 1.5 Pro) | Mid–High | R&D, technical analysis, enterprise |
| LLaMA 3 | Self-hosted (Meta) | 8K (128K in Llama 3.1) | Low (infra only) | Startups, privacy-sensitive apps |
| Mixtral / Mistral 7B | Self-hosted (OSS) | 8K–32K | Low | MVPs, dev tools, cost-conscious |

- API models are billed per token, with separate rates for input and output tokens (output tokens usually cost more).

- Open-source models have no per-token fees (subject to their license terms) but require infrastructure (GPU hosting, DevOps).

- API-based models are faster to deploy but can increase costs quickly with usage.
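Per-token pricing is easy to turn into a rough monthly budget. A minimal sketch: the prices below are placeholders, not any provider’s actual rates, so substitute current figures from the provider’s pricing page. The function name `monthly_api_cost` is hypothetical.

```python
def monthly_api_cost(requests_per_day: int, input_tokens: int, output_tokens: int,
                     input_price_per_m: float, output_price_per_m: float,
                     days: int = 30) -> float:
    """Estimate monthly spend for a per-token-priced API model.

    Prices are in USD per 1M tokens. The values used below are placeholders,
    not real list prices.
    """
    per_request = (input_tokens * input_price_per_m
                   + output_tokens * output_price_per_m) / 1_000_000
    return per_request * requests_per_day * days


# RAG prompts are input-heavy: retrieved context inflates the input tokens.
cost = monthly_api_cost(
    requests_per_day=5_000,
    input_tokens=3_000,       # question + retrieved chunks
    output_tokens=300,        # generated answer
    input_price_per_m=2.0,    # placeholder price
    output_price_per_m=8.0,   # placeholder price
)
print(f"${cost:,.2f} per month")
```

Comparing this estimate with the monthly cost of GPU hosting gives a rough break-even point for self-hosting an open model at your traffic level.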

Select your model not just by benchmarks — but by what fits your business objective. Choose open-source LLMs when control and long-term cost-efficiency matter — and hosted APIs when speed-to-market is the priority.

Frequently Asked Questions

There’s no one-size-fits-all model. Your choice depends on factors like compliance needs, language complexity, and user volume.

Here’s what we usually recommend:

Legal, Healthcare: Claude 3

Finance: GPT-4o or Claude 3

SaaS / Support: GPT-4o

Internal Tools / MVPs: LLaMA 3 or Mixtral

Go through the LLM model comparison and find the best option for your use case. Or, we can help you choose the best LLM for your industry-specific chatbot.


In many cases, a domain-specific LLM, either fine-tuned or paired with a RAG system, can deliver more relevant, accurate, and compliant responses than a general-purpose model used on its own.

General-purpose LLMs like GPT-4 or Claude can still work well when paired with high-quality retrieval, even in specialized industries like legal, healthcare, or finance.

When choosing a model, we typically recommend answering the following questions:

1. What type of content will the chatbot handle (FAQs, research, internal docs)?

2. How important is compliance, accuracy, or explainability?

3. Do you prefer API-hosted or locally deployed models?

4. Will the LLM need to integrate with tools like LangChain?

If you are still unsure about LLM selection, connect with our AI chatbot development company for expert guidance and implementation.

Open models are a strong option too. LLaMA 3, Mistral, and Mixtral are powerful, openly available models used to build private, domain-specific RAG chatbots. Combined with vector databases and frameworks like LangChain or LlamaIndex, they give you full control over data privacy and cost.

Need expert help? Hire our AI developers to build custom, domain-specific chatbots tailored to your business needs.

RAG lets your chatbot draw on up-to-date knowledge from your internal content (PDFs, docs, etc.). At query time, it retrieves the most relevant passages and passes them to the LLM, so answers are grounded in your data without retraining the base model.

This makes RAG a faster, cheaper, and more scalable alternative to fine-tuning in many domains: updating the chatbot’s knowledge means updating the documents, not the model.
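The retrieve-then-generate flow described above can be sketched in a few lines of plain Python. This minimal example scores documents by bag-of-words cosine similarity, a stand-in (by assumption) for the embedding model and vector database, such as Pinecone or Qdrant, that a production chatbot would use. It then places the top matches into the prompt. The helper names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a crude stand-in for an embedding model."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


docs = [
    "Employees accrue 20 vacation days per year.",
    "The VPN must be used on public Wi-Fi.",
    "Vacation requests need manager approval two weeks ahead.",
]
question = "How many vacation days do employees get?"
context = retrieve(question, docs, k=2)

# The retrieved passages, not retrained weights, carry the domain knowledge
prompt = "Answer from this context only:\n" + "\n".join(context) + f"\n\nQuestion: {question}"
print(prompt)
```

Changing what the chatbot knows only requires editing `docs` (in production, re-indexing the vector database); the LLM itself stays untouched.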

Let’s Select the Best LLM for Your Chatbot

Today’s market offers a wide range of LLMs. Don’t rush the process. Take time to understand both the capabilities of the available models and your business needs.

Our LLM model comparison can guide you through key stages of the process. The best LLM isn’t the one with the most tokens or the highest benchmark — it’s the one that fits your domain, scale, and technical context.

- Looking for high speed and scale? Use GPT-4o.

- Looking for more control? Go with LLaMA 3 or Mixtral.

- Looking for extensive document understanding? Claude 3 or Gemini 1.5 will deliver.

To see how these concepts come together in practice, our AI Chatbot Guide (2025) walks you through step‑by‑step examples.

Need help choosing or implementing the right LLM? At The Brihaspati Infotech, we help startups and enterprises deploy scalable, domain-specific AI chatbots using OpenAI, Gemini, and other LLM models.
