LangChain v1 Is Here: Build Faster, Smarter AI Agents

Artificial intelligence agents are no longer just experimental demos. They’re becoming the backbone of modern applications. From customer-support chatbots to enterprise knowledge assistants and workflow automation, businesses are racing to harness AI in ways that feel natural, reliable, and scalable.

At the center of this movement is LangChain, a framework that lets teams integrate language models, tools, and memory into powerful applications. Early versions unlocked creativity but often left developers navigating fragmented patterns and fragile deployments.

With the LangChain v1 release, the focus shifts from experimentation to execution. The framework moves from flexible but friction-heavy to production-grade and business-ready, giving teams the tools to build SaaS-grade AI applications in-house with speed, stability, and control. For teams delivering artificial intelligence development services, that means the durability, observability, and governance needed to deliver agents that work reliably at scale.

This release isn’t just about new features; it’s about turning ideas into working systems instead of prototypes that collapse under pressure. In this blog, we’ll break down what’s new, why it matters, and how the LangChain v1 update helps your business build faster, smarter AI agents that are ready for the real world.

LangChain v0 in Review: Where It Fell Short

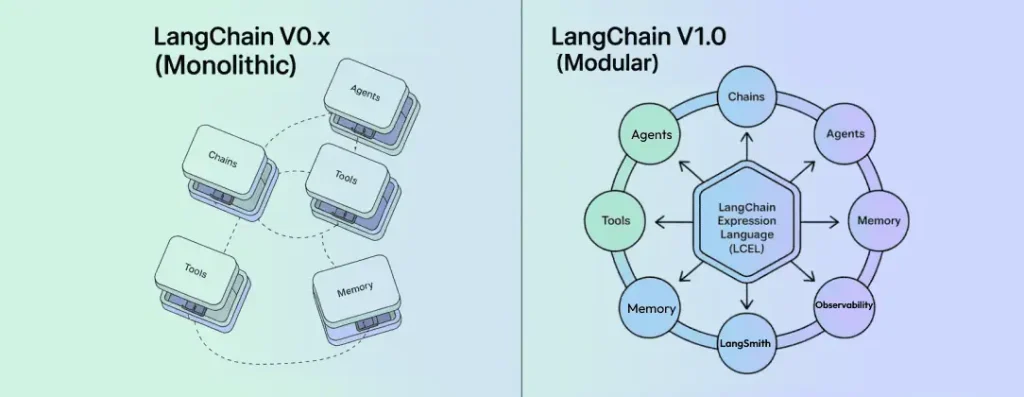

Before the LangChain v1 release, developers depended on the early LangChain agent framework to experiment with chatbots, knowledge assistants, and automation workflows. Although powerful, LangChain v0 often felt more like a toolbox than a true platform.

Teams had access to chains, agents, tools, and memory. What was missing?

Structure, consistency, and a reliable path to production.

LangChain v0 exposed real friction:

- Too many ways to build agents: Overlapping patterns confused teams and slowed onboarding.

- Complex workflows were fragile: Multi-step logic worked in demos, not always in real systems.

- Low observability: Debugging was hard. Monitoring was limited.

- Scaling was painful: Great for experiments. Not great for enterprise launch.

In short, v0 sparked innovation but made execution hard. It created high friction for businesses aiming to deliver SaaS‑grade AI applications quickly.

The LangChain v1 update directly addresses these gaps. It replaces trial and error with architecture, guesswork with reliability, and prototypes with production-ready systems.

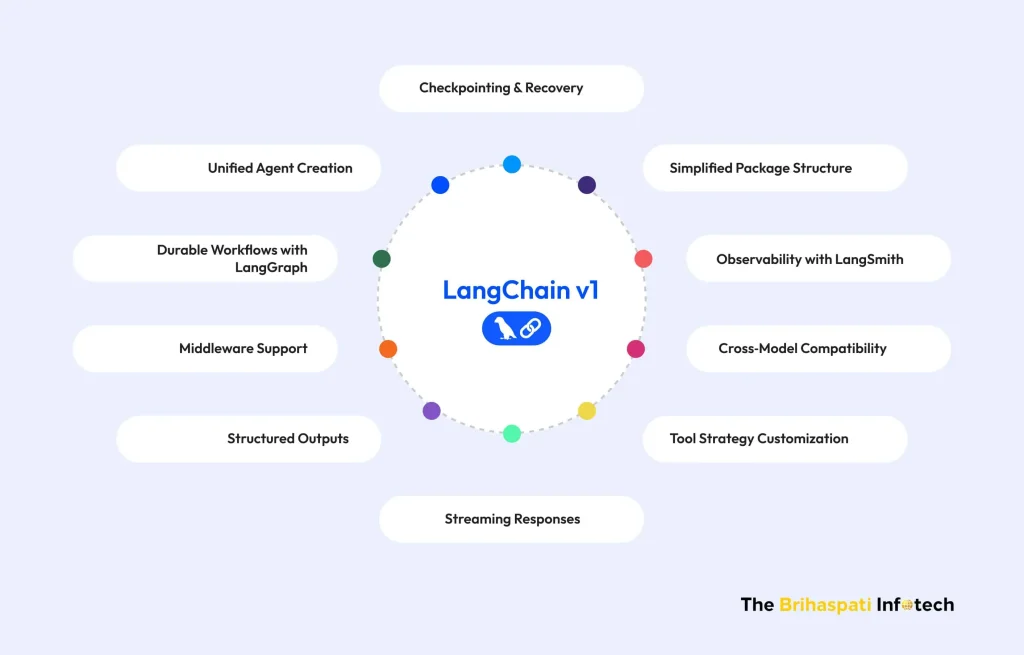

What’s New in LangChain v1 and Why It Matters

The LangChain v1 release transforms the LangChain agent framework into a production‑grade platform. The update introduces a clear structure for building agents, adds resilience to workflows, and provides the flexibility teams need to scale AI applications with confidence.

1. Unified Agent Creation

The LangChain v1 release introduces a single create_agent API that replaces the fragmented agent-creation methods of v0. This unified approach gives developers one consistent path, reducing confusion and improving collaboration across teams.

Consolidating agent creation into one standardized process makes documentation clearer and gives projects a stronger foundation for scaling.
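The single-entry-point idea can be illustrated without any framework. The Python sketch below is not LangChain's create_agent; it is a minimal stand-in, assuming a hypothetical make_agent factory, a stub model, and a simple dict-based message format, that shows the loop such a factory typically wraps: call the model, run any tool it requests, feed the result back, and stop when it answers.

```python
from typing import Callable

# Hypothetical message, model, and tool types used only in this sketch.
Message = dict
Model = Callable[[list[Message]], Message]
Tool = Callable[..., str]


def make_agent(model: Model, tools: dict[str, Tool], system_prompt: str, max_steps: int = 5) -> Callable[[str], str]:
    """Build one agent callable from a model, a tool registry, and a system prompt."""

    def run(user_input: str) -> str:
        messages: list[Message] = [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_input},
        ]
        for _ in range(max_steps):
            reply = model(messages)
            messages.append(reply)
            call = reply.get("tool_call")
            if call is None:
                # No tool requested: the model's text is the final answer.
                return reply["content"]
            # Run the requested tool and feed its result back to the model.
            result = tools[call["name"]](**call["args"])
            messages.append({"role": "tool", "name": call["name"], "content": result})
        raise RuntimeError("agent did not produce a final answer within max_steps")

    return run


def stub_model(messages: list[Message]) -> Message:
    """Hypothetical model: requests the weather tool once, then answers."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"role": "assistant", "content": f"Forecast: {last['content']}"}
    return {
        "role": "assistant",
        "content": "",
        "tool_call": {"name": "get_weather", "args": {"city": "Paris"}},
    }


def get_weather(city: str) -> str:
    """Hypothetical tool returning canned data."""
    return f"sunny in {city}"


agent = make_agent(stub_model, {"get_weather": get_weather}, "You are a helpful assistant.")
print(agent("What's the weather in Paris?"))  # Forecast: sunny in Paris
```

Because every agent is assembled the same way, swapping the model or adding a tool changes an argument rather than the surrounding code.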

What This Means for Your Business:

- Faster onboarding and development cycles: Companies can accelerate development cycles by removing complexity and lowering onboarding costs.

- More predictable AI behavior: Predictable agent behavior also reduces risk, making it easier to scale SaaS‑grade applications with confidence.

- Scalable, SaaS-grade foundations: Standardization improves collaboration across teams, ensuring enterprise projects remain consistent and easier to maintain over time. It gives you the confidence to grow from MVP to production without rebuilding.

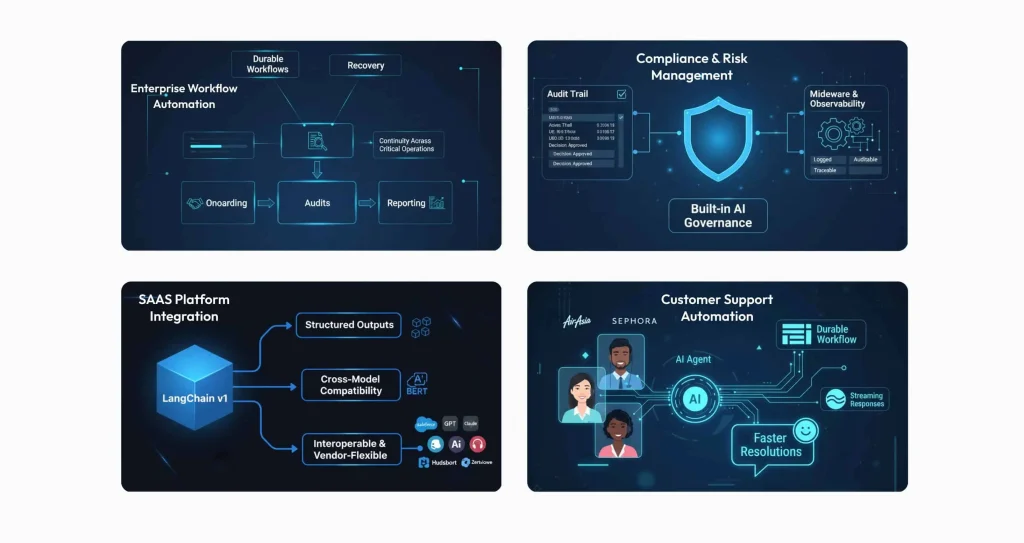

2. Durable Workflows with LangGraph

In LangChain v1, agents are built on LangGraph. They can pause, resume, and checkpoint tasks, so long-running workflows continue without breaking. This durability keeps processes stable even when they are interrupted or scaled.

This introduces resilience into multi‑step logic, making agents capable of handling complex enterprise workflows without collapsing under pressure.
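LangGraph implements pause and resume with its own checkpointers and interrupts. The framework-free Python sketch below only illustrates the idea, assuming a hypothetical in-memory checkpoints store and an Interrupt exception: state is saved after every step, a step can pause the run for human approval, and a later call with the same thread id resumes where it stopped instead of starting over.

```python
import copy
from typing import Callable, Optional


class Interrupt(Exception):
    """Raised by a step that must pause for outside input, such as human approval."""


def draft(state: dict) -> None:
    state["draft"] = f"Compliance report for {state['client']}"


def approve(state: dict) -> None:
    # Pause until a reviewer decision has been supplied.
    if "approved" not in state:
        raise Interrupt("waiting for reviewer")
    if not state["approved"]:
        raise ValueError("report rejected by reviewer")


def publish(state: dict) -> None:
    state["published"] = True


STEPS: list[Callable[[dict], None]] = [draft, approve, publish]
checkpoints: dict[str, dict] = {}  # hypothetical in-memory store keyed by thread id


def run_workflow(thread_id: str, initial: Optional[dict] = None, updates: Optional[dict] = None) -> dict:
    # Resume from this thread's last checkpoint, or start fresh.
    saved = checkpoints.get(thread_id, {"next_step": 0, "state": initial or {}})
    state = copy.deepcopy(saved["state"])
    state.update(updates or {})
    for i in range(saved["next_step"], len(STEPS)):
        try:
            STEPS[i](state)
        except Interrupt:
            # Save progress; the interrupted step runs again on resume.
            checkpoints[thread_id] = {"next_step": i, "state": copy.deepcopy(state)}
            return {"status": "paused", "at": STEPS[i].__name__}
        # Checkpoint after every completed step.
        checkpoints[thread_id] = {"next_step": i + 1, "state": copy.deepcopy(state)}
    return {"status": "done", "state": state}


first = run_workflow("thread-1", initial={"client": "Acme"})
print(first)  # {'status': 'paused', 'at': 'approve'}
second = run_workflow("thread-1", updates={"approved": True})
print(second["status"], second["state"]["published"])  # done True
```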

What This Means for Your Business:

- Higher operational reliability: Enterprises can rely on agents for mission‑critical tasks like compliance checks or audits.

- Stronger customer trust: Reduced downtime and operational risk translate into higher reliability and stronger customer trust.

- Minimized risk of data loss: Continuity in workflows means businesses can confidently deploy agents in production environments without fear of losing progress or data.

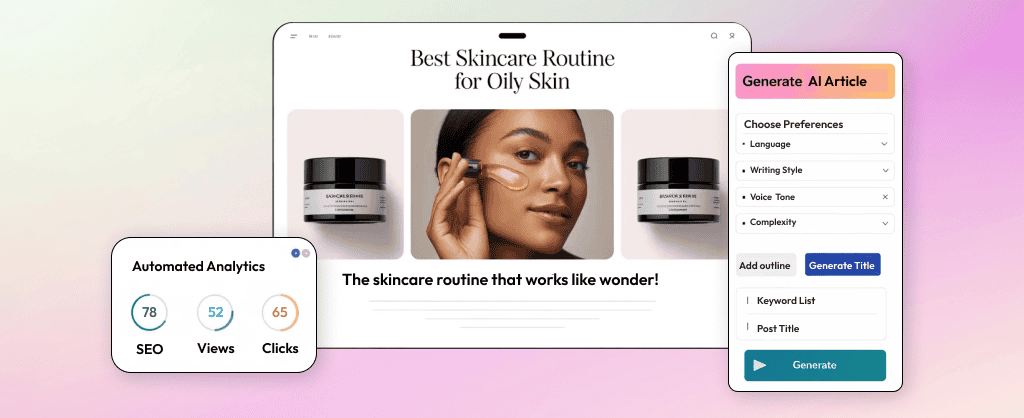

These durable capabilities are already delivering real results in production environments. For example, we’ve built a LangGraph-powered AI report generator that automates complex SEO workflows—handling multi-step analysis, contextual decisions, and reliable outputs—saving agencies over 15 hours per week.

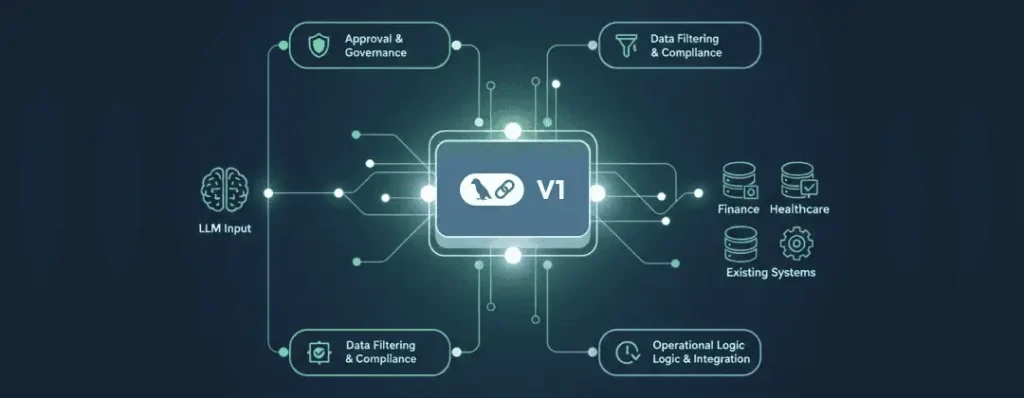

3. Middleware Support

The LangChain v1 release introduces middleware hooks that let developers inject custom logic into agent workflows. You can add approvals, filters, logging, or other business rules at key decision points without breaking the overall agent architecture.

It gives teams the power to adapt agent behavior to real‑world constraints while maintaining a clean, maintainable framework. This flexibility echoes the role of function calling in extending LLM capabilities, which we’ve explored in detail in our OpenAI function calling guide.
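LangChain v1 ships its own middleware classes and decorators, which this sketch does not reproduce. Instead, it shows the underlying pattern in plain Python, assuming a dict-based request and hypothetical hook names: each middleware receives the request and a call_next function, so it can filter input, log results, or short-circuit with a business rule before the model runs. The email regex and the 500 refund limit are illustrative only.

```python
import re
from typing import Callable

Request = dict
Response = dict
Handler = Callable[[Request], Response]
Middleware = Callable[[Request, Handler], Response]

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")
audit_log: list[str] = []


def redact_emails(request: Request, call_next: Handler) -> Response:
    """Filter: mask email addresses before anything downstream sees the prompt."""
    return call_next({**request, "prompt": EMAIL.sub("[EMAIL]", request["prompt"])})


def log_calls(request: Request, call_next: Handler) -> Response:
    """Logging: record each (already redacted) prompt and its answer for audits."""
    response = call_next(request)
    audit_log.append(f"{request['prompt']!r} -> {response['text']!r}")
    return response


def require_approval(request: Request, call_next: Handler) -> Response:
    """Business rule: hold refunds above a limit unless they are pre-approved."""
    if request.get("refund", 0) > 500 and not request.get("approved"):
        return {"text": "Refund held for manager approval."}
    return call_next(request)


def _wrap(middleware: Middleware, call_next: Handler) -> Handler:
    return lambda request: middleware(request, call_next)


def build_pipeline(model: Handler, middleware: list[Middleware]) -> Handler:
    """Chain middleware around the model; the first entry in the list runs first."""
    handler = model
    for mw in reversed(middleware):
        handler = _wrap(mw, handler)
    return handler


def echo_model(request: Request) -> Response:
    """Hypothetical model that just echoes the prompt it receives."""
    return {"text": f"Handled: {request['prompt']}"}


agent = build_pipeline(echo_model, [redact_emails, log_calls, require_approval])
print(agent({"prompt": "Refund jane@example.com", "refund": 120}))  # {'text': 'Handled: Refund [EMAIL]'}
print(agent({"prompt": "Refund bob@example.com", "refund": 900}))  # {'text': 'Refund held for manager approval.'}
```

Ordering matters: placing redaction before logging keeps raw email addresses out of the audit log.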

What This Means for Your Business:

- Built-in governance and compliance: Businesses can enforce governance policies directly within workflows, improving accountability and compliance.

- Industry-specific flexibility: Middleware makes AI adoption safer in industries with strict regulations, such as finance, healthcare, legal, or any other regulated environment.

- Better integration with operations: Middleware also allows companies to tailor agent behavior to their operational needs, ensuring smoother integration with existing systems.

4. Structured Outputs

The LangChain v1 release introduces content_blocks, a standardized representation of message content (text, reasoning, tool calls, and more) across LLM providers. Outputs stay consistent, predictable, and easy to parse, no matter which model you’re using.

Structured outputs make it easier to integrate agents into downstream systems like databases, CRMs, or internal tools — with far less custom logic.
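To show why a shared format reduces custom logic, the sketch below normalizes two made-up provider payloads into one list of typed blocks before a downstream function consumes them. The payload shapes, the block keys, and the function names are simplified assumptions for illustration; they are not LangChain's content_blocks schema or any provider's exact response format.

```python
import json
from typing import Any

Block = dict[str, Any]


def from_provider_a(payload: dict) -> list[Block]:
    """Hypothetical provider A: content arrives as a list of typed parts."""
    blocks: list[Block] = []
    for part in payload["content"]:
        if part["type"] == "text":
            blocks.append({"type": "text", "text": part["text"]})
        elif part["type"] == "tool_use":
            blocks.append({"type": "tool_call", "name": part["name"], "args": part["input"]})
    return blocks


def from_provider_b(payload: dict) -> list[Block]:
    """Hypothetical provider B: a text field plus tool calls with JSON-encoded arguments."""
    blocks: list[Block] = []
    if payload.get("text"):
        blocks.append({"type": "text", "text": payload["text"]})
    for call in payload.get("tool_calls", []):
        blocks.append({"type": "tool_call", "name": call["function"], "args": json.loads(call["arguments"])})
    return blocks


def to_crm_record(blocks: list[Block]) -> dict:
    """Downstream code handles one block format, whichever provider produced it."""
    return {
        "summary": " ".join(b["text"] for b in blocks if b["type"] == "text"),
        "actions": [b["name"] for b in blocks if b["type"] == "tool_call"],
    }


raw_a = {"content": [
    {"type": "text", "text": "Lead qualified."},
    {"type": "tool_use", "name": "create_ticket", "input": {"priority": "high"}},
]}
raw_b = {"text": "Lead qualified.", "tool_calls": [
    {"function": "create_ticket", "arguments": '{"priority": "high"}'},
]}

print(from_provider_a(raw_a) == from_provider_b(raw_b))  # True
print(to_crm_record(from_provider_a(raw_a)))  # {'summary': 'Lead qualified.', 'actions': ['create_ticket']}
```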

What This Means for Your Business:

- Cross-provider flexibility: Switch between LLM providers without rewriting business workflows or retraining teams.

- Lower engineering costs: Consistent outputs reduce the need for custom handling or rework during scale.

- Simplified maintenance: Enterprise applications become more stable, predictable, and future-proof.

- Faster integration: Outputs fit cleanly into existing systems, speeding up time to production.

5. Streaming Responses

Agents can stream outputs in real time, delivering partial results before tasks are fully complete. This improves responsiveness and creates a more interactive user experience.

Streaming ensures that users don’t have to wait for long processes to finish before seeing progress.
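The consumer side of streaming can be sketched with a plain Python generator. This is not LangChain's streaming API; fake_model_stream is a hypothetical stand-in for a model, and the point is only that the interface updates with each chunk instead of waiting for the complete answer.

```python
import time
from typing import Iterator


def fake_model_stream(answer: str, delay: float = 0.0) -> Iterator[str]:
    """Hypothetical model that yields its answer one word at a time."""
    for word in answer.split():
        time.sleep(delay)  # simulate generation latency per chunk
        yield word + " "


def render(stream: Iterator[str]) -> str:
    """Show each partial result as soon as it arrives, then return the full text."""
    shown = ""
    for chunk in stream:
        shown += chunk
        print(f"\r{shown}", end="", flush=True)  # redraw the line with the text so far
    print()
    return shown.strip()


final = render(fake_model_stream("Your order ships tomorrow.", delay=0.05))
```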

What This Means for Your Business:

- Improved user experience: Customer‑facing applications like chatbots or support tools feel faster and more engaging, improving satisfaction.

- Reduced churn and frustration: Real‑time feedback builds trust and reduces frustration caused by latency, which is critical for SaaS‑grade adoption.

6. Tool Strategy Customization

Developers can fine‑tune how agents select and use tools, including prioritization and fallback strategies. This customization improves efficiency and decision‑making.

Agents can now adapt tool usage to specific workflows, ensuring smarter execution.
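Prioritization and fallback can be expressed as an ordered list of tools that an agent tries in turn. The framework-agnostic sketch below assumes hypothetical tools, numeric priorities, and a ToolError exception; it illustrates the strategy itself, not how LangChain configures it.

```python
from typing import Callable

Tool = Callable[[str], str]


class ToolError(Exception):
    """Raised when a tool cannot produce a result."""


def live_search(query: str) -> str:
    raise ToolError("search API timed out")  # simulate an outage


def cached_search(query: str) -> str:
    cache = {"pricing": "cached pricing summary"}
    if query not in cache:
        raise ToolError("cache miss")
    return cache[query]


def call_with_fallback(tools: list[tuple[int, Tool]], query: str) -> tuple[str, str]:
    """Try tools in priority order (lowest number first) until one succeeds."""
    errors = []
    for _, tool in sorted(tools, key=lambda entry: entry[0]):
        try:
            return tool.__name__, tool(query)
        except ToolError as exc:
            errors.append(f"{tool.__name__}: {exc}")
    raise ToolError("all tools failed: " + "; ".join(errors))


search_tools = [(2, cached_search), (1, live_search)]
print(call_with_fallback(search_tools, "pricing"))  # ('cached_search', 'cached pricing summary')
```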

What This Means for Your Business:

- Workflow efficiency: Organizations can optimize workflows for specific business needs, reducing wasted compute costs and inefficiencies.

- Tailored automation: Smarter tool usage ensures agents deliver more reliable results, strengthening enterprise confidence in automation.

7. Cross‑Model Compatibility

The LangChain v1 update standardizes APIs across LLM providers, making it easier to swap models without rewriting workflows. Hybrid deployments that mix models from different providers are supported as well.

This flexibility allows businesses to future‑proof their applications against rapid changes in the AI ecosystem. It also mirrors the importance of choosing the right domain‑specific model for RAG chatbots, which we’ve broken down in our LLM comparison blog.
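The sketch below shows why a shared interface lowers switching costs: business logic calls one method, and the concrete model is chosen from a "provider:model" string. LangChain uses a similar string style for model identifiers, but the registry, the client classes, and the model names here are hypothetical placeholders, not real SDKs.

```python
from typing import Callable, Protocol


class ChatModel(Protocol):
    def invoke(self, prompt: str) -> str: ...


class ProviderAModel:
    """Hypothetical client for provider A."""

    def __init__(self, name: str) -> None:
        self.name = name

    def invoke(self, prompt: str) -> str:
        return f"[A/{self.name}] {prompt}"


class ProviderBModel:
    """Hypothetical client for provider B."""

    def __init__(self, name: str) -> None:
        self.name = name

    def invoke(self, prompt: str) -> str:
        return f"[B/{self.name}] {prompt}"


PROVIDERS: dict[str, Callable[[str], ChatModel]] = {
    "provider_a": ProviderAModel,
    "provider_b": ProviderBModel,
}


def load_model(spec: str) -> ChatModel:
    """Resolve a 'provider:model' string to a client with the shared interface."""
    provider, _, name = spec.partition(":")
    if provider not in PROVIDERS or not name:
        raise ValueError(f"unknown model spec: {spec!r}")
    return PROVIDERS[provider](name)


def summarize_ticket(model: ChatModel, ticket: str) -> str:
    # Business logic depends only on invoke(), never on a specific vendor.
    return model.invoke(f"Summarize: {ticket}")


# Hybrid setup: a different model per task, swapped by editing config, not code.
ROUTES = {"triage": "provider_a:fast-model", "drafting": "provider_b:large-model"}
for task, spec in ROUTES.items():
    print(task, "->", summarize_ticket(load_model(spec), "Login fails on mobile"))
```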

What This Means for Your Business:

- Vendor flexibility: Companies gain freedom to choose the best model for each task, reducing dependency on a single vendor.

- Reduced switching costs: Cross‑model support lowers negotiation risks and ensures applications remain adaptable as the market evolves.

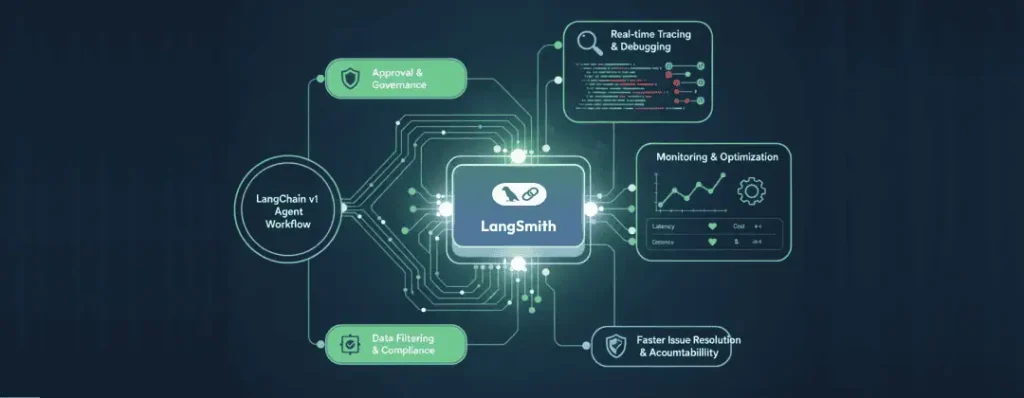

8. Observability with LangSmith

The LangChain v1 release integrates with LangSmith, an observability platform for real-time tracing, debugging, and monitoring of agent behavior. Developers can visualize how decisions are made, track tool calls, and identify bottlenecks in detail.

This transforms agent development from guesswork to data-driven iteration.
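LangSmith records traces for you once tracing is enabled, and this sketch does not use its SDK. It is a plain-Python illustration, with a hypothetical traced decorator and TRACE list, of what a trace span typically captures (the step's name and kind, its inputs, its output or error, and its duration) and how nested steps make tool calls and bottlenecks visible.

```python
import functools
import time
from typing import Any, Callable

TRACE: list[dict[str, Any]] = []  # hypothetical in-process trace store


def traced(kind: str) -> Callable[[Callable], Callable]:
    """Record name, kind, inputs, output or error, and duration for each call."""

    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            span: dict[str, Any] = {"name": fn.__name__, "kind": kind, "inputs": {"args": args, "kwargs": kwargs}}
            start = time.perf_counter()
            try:
                span["output"] = fn(*args, **kwargs)
                return span["output"]
            except Exception as exc:
                span["error"] = repr(exc)
                raise
            finally:
                # Spans are appended when a call finishes, so inner steps appear first.
                span["ms"] = round((time.perf_counter() - start) * 1000, 2)
                TRACE.append(span)

        return wrapper

    return decorator


@traced("tool")
def lookup_order(order_id: str) -> str:
    return "shipped"  # stand-in for a real order-system call


@traced("agent")
def answer(question: str) -> str:
    return f"Your order is {lookup_order('A-17')}."


answer("Where is my order?")
for span in TRACE:
    print(span["kind"], span["name"], span["ms"], "ms")
```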

What This Means for Your Business:

- Faster issue resolution: Proactive debugging reduces downtime and improves reliability, which is critical for enterprise adoption.

- Continuous optimization: Data‑driven insights allow businesses to continuously refine workflows, ensuring compliance and accountability.

9. Simplified Package Structure

The LangChain v1 release cleanly separates core and legacy functionality. Core functionality is streamlined into the langchain package, while legacy features move to the separate langchain-classic package. This reduces clutter and makes the framework easier to navigate.

Developers benefit from a cleaner, more intuitive package layout that simplifies upgrades.

What This Means for Your Business:

- Faster team onboarding: New teams can adopt LangChain faster with a simplified structure, lowering entry barriers.

- Lower maintenance costs: Existing projects benefit from clearer upgrade paths, reducing maintenance overhead and long‑term costs.

10. Checkpointing & Recovery

The LangChain v1 update enables checkpointing: agents save their state mid-workflow, so they can recover after crashes or interruptions. This ensures continuity in long-running or complex tasks.

Checkpointing provides resilience for enterprise processes that cannot afford failure.
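LangGraph handles persistence with its own checkpointer classes. The framework-free sketch below shows the same recovery idea, assuming a hypothetical JSON checkpoint file and a crash_before flag used only to simulate a failure: progress is saved after each item, and a rerun resumes from the last saved item instead of redoing finished work.

```python
import json
import os
import tempfile
from typing import Optional


def load_checkpoint(path: str) -> dict:
    """Return saved progress, or a fresh state if no checkpoint exists yet."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"done": 0, "results": []}


def save_checkpoint(path: str, state: dict) -> None:
    # Write to a temporary file first, then swap it in with os.replace, so an
    # interruption mid-write does not corrupt the previous checkpoint.
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)


def process_batch(items: list[str], path: str, crash_before: Optional[int] = None) -> list[str]:
    state = load_checkpoint(path)
    for i in range(state["done"], len(items)):
        if crash_before == i:
            raise RuntimeError(f"simulated crash before item {i}")
        state["results"].append(items[i].upper())  # stand-in for an expensive step
        state["done"] = i + 1
        save_checkpoint(path, state)  # persist after every completed item
    return state["results"]


path = os.path.join(tempfile.mkdtemp(), "audit_checkpoint.json")
docs = ["invoice-1", "invoice-2", "invoice-3"]
try:
    process_batch(docs, path, crash_before=2)
except RuntimeError as exc:
    print("crashed:", exc, "| items saved:", load_checkpoint(path)["done"])
print(process_batch(docs, path))  # ['INVOICE-1', 'INVOICE-2', 'INVOICE-3']
```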

What This Means for Your Business:

- No lost progress: Agents can recover from disruptions without compromising the task or user experience.

- Stronger system reliability: Critical workflows can continue without data loss or incomplete tasks, strengthening reliability at scale.

- Higher stakeholder confidence: Enterprises gain confidence that agents will perform consistently, even under unexpected conditions.

More than an upgrade, LangChain v1 sets the stage for the next era of AI development. By combining structure, resilience, and flexibility, it allows organizations to innovate faster and deliver applications that are truly ready for the demands of the future.

Practical Applications of LangChain v1

The true value of LangChain v1 isn’t just in technical updates. It’s in how these features solve real business problems. Move beyond prototypes. Deploy agents that are resilient, compliant, and scalable.

- Customer Support Automation: Durable workflows and streaming responses let agents handle complex queries without breaking mid-conversation. This contributes to faster resolutions, happier customers, and lower support costs. Case studies from AirAsia and Sephora show a similar impact, which we’ve analyzed in our blog on AI agents for customer support.

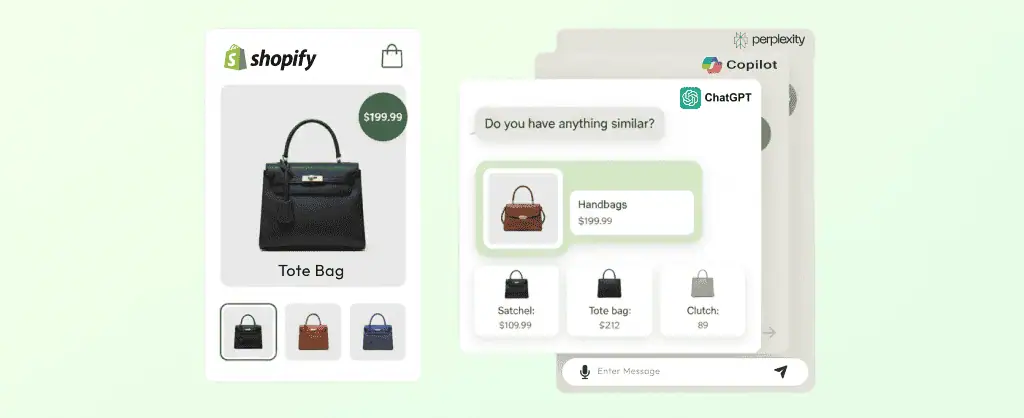

- Conversational Commerce: Reliable multi-turn conversations let LangChain v1 agents maintain context over long interactions. The same capabilities can power full in-chat shopping experiences on Shopify, where agents guide customers through product discovery, recommendations, and purchases. See how this works in practice: How to Sell in AI Chats with Shopify Agentic Commerce?

- Compliance & Risk Management: Middleware and observability tools make it possible to log, trace, and audit every AI decision. Governance is built in, making AI adoption safer and more reliable, even in highly regulated industries.

- SaaS Platform Integrations: Structured outputs and cross‑model compatibility streamline embedding into SaaS products. The framework is interoperable, vendor‑flexible, and built for growth.

- Data Analysis & Insights: Tool strategy customization and checkpointing enable reliable processing of large datasets. This delivers actionable insights without interruptions and supports smarter decision‑making across teams.

- Enterprise Workflow Automation: Recovery combined with durable workflows keeps multi-step processes like onboarding, audits, and reporting running smoothly. This reduces manual effort and protects continuity across critical operations.

With the LangChain v1 release, organizations can move confidently from experimentation to production. It’s a true cornerstone for AI solutions that are not just innovative but enterprise-ready.

Frequently Asked Questions

How is LangChain v1 different from v0?

Unlike v0, which was more experimental, v1 introduces a unified agent creation API, durable workflows, middleware support, structured outputs, and improved observability, making it production-ready.

If your organization is exploring how to leverage these capabilities, our AI development agency can help architect and implement enterprise‑grade solutions tailored to your business needs. Let’s talk!

Can LangChain v1 be integrated into SaaS platforms?

Yes. Structured outputs and cross-model compatibility make it easy to embed agents into SaaS platforms, ensuring interoperability and vendor flexibility. Beyond technical fit, v1 reduces integration friction by standardizing outputs across providers, which means teams can scale features without rewriting workflows.

This makes SaaS products more adaptive, future‑proof, and ready to deliver AI‑powered experiences to customers.

If you’re looking to accelerate SaaS integration with AI, our experts can help design and implement solutions tailored to your platform. Get in touch

What can enterprises build with LangChain v1?

Enterprises can use LangChain v1 for customer support automation, compliance workflows, SaaS integrations, large-scale data analysis, and multi-step workflow automation.

To bring these applications to life, hire top-rated AI engineers who can accelerate implementation and ensure your solutions are tailored to business needs.

LangChain v1 in Action: Building Reliable AI Agents

LangChain v1 is more than a technical update. Custom AI agents built on it can deliver reliable workflows, enforce governance, and unlock enterprise-scale personalization. By combining durable workflows, structured outputs, and observability, organizations can transform how their teams build, deploy, and trust AI systems.

At the same time, agents are part of a broader ecosystem of enterprise AI adoption. Our AI Development company helps brands across industries design intuitive, business‑centric solutions that drive engagement, efficiency, and long‑term growth.

Looking to explore more? Our blog on Custom AI Agents Development with OpenAI Agent Builder dives deeper into practical examples, showing how enterprises can design agents that adapt across industries and deliver measurable impact.

Ready to discuss your own AI agent project? Contact us today and discover how we can tailor enterprise AI solutions to your business needs.
