LLM Function Calling with OpenAI: A Practical Guide for AI Developers

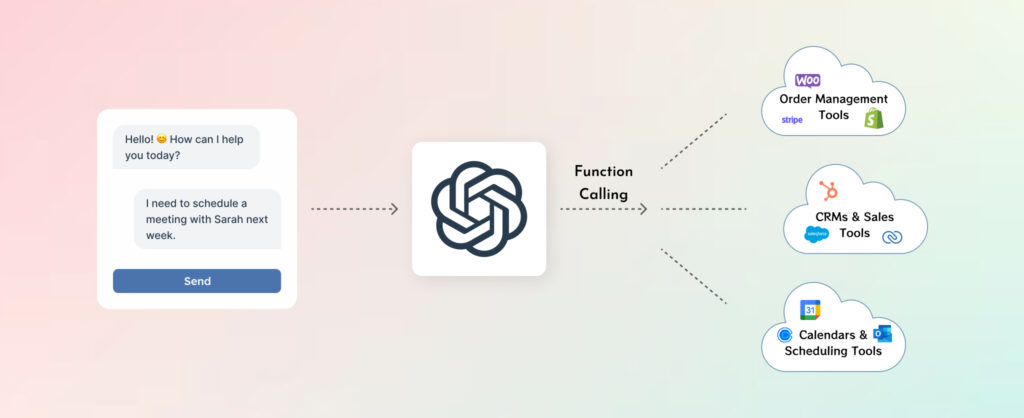

Large Language Models (LLMs) are no longer just chatbots — they’re becoming software interfaces. With OpenAI function calling, developers can now connect large language models like GPT-4 to real-world systems: APIs, tools, databases, and internal workflows.

This means users can do more than chat. At our AI chatbot development company, we build intelligent assistants that don’t just respond; they act. Using LLM function calling, these chatbots can schedule meetings, pull real-time data, and trigger automated workflows.

In this guide, you’ll learn what LLM function calling is, how OpenAI function calling works, where it shines, and how to use it securely. Let’s break it down.

What Is LLM Function Calling & Why Does It Matter?

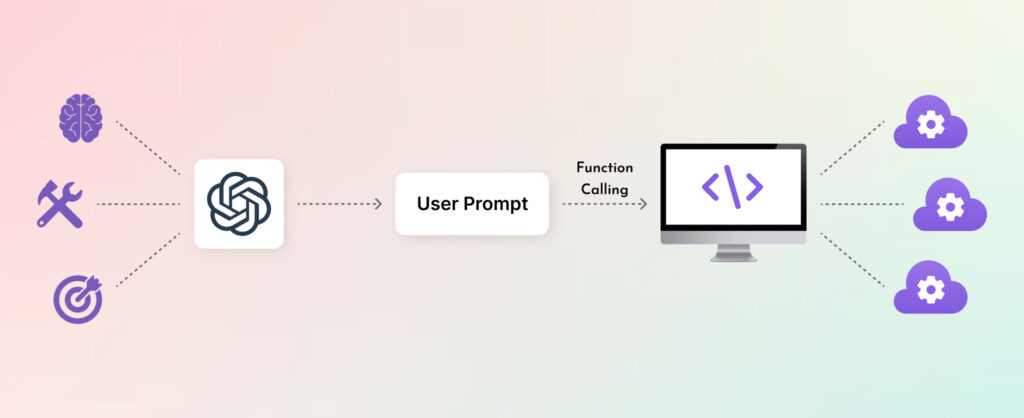

Function calling allows LLMs like GPT-4 or GPT-4o to take user intent and map it to a structured function call: a piece of code you’ve exposed that performs a real action.

Rather than generating answers as text alone, the model can say: “This prompt means I should call this function, with these arguments.”

Why it matters:

- Triggers live actions instead of static responses

- Automates real-world tasks from a natural language interface

- Connects AI to your tools, systems, and workflows

Example: A user asks, “Send a follow-up email to Amanda about the new contract.” The model doesn’t just suggest text; it fills in the arguments for your send_email() function, which your application then executes.

How Does LLM Function Calling Work with OpenAI?

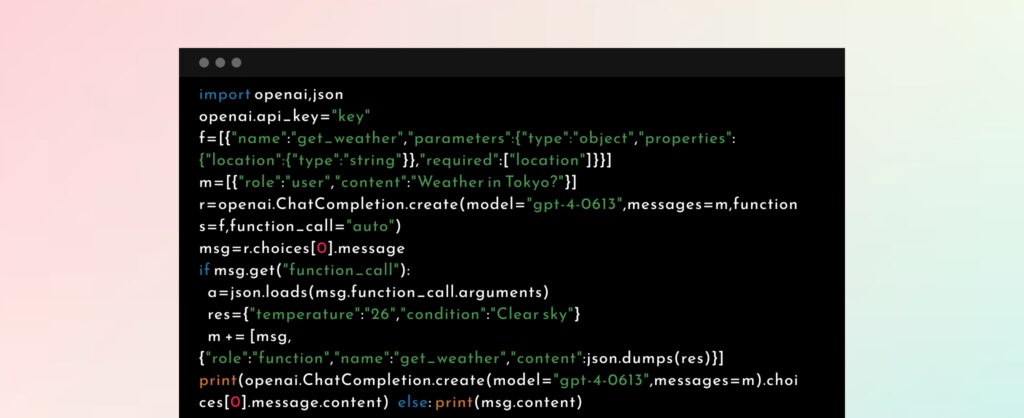

OpenAI’s API introduced this pattern by letting you define what functions your model can access. Here’s how OpenAI function calling works:

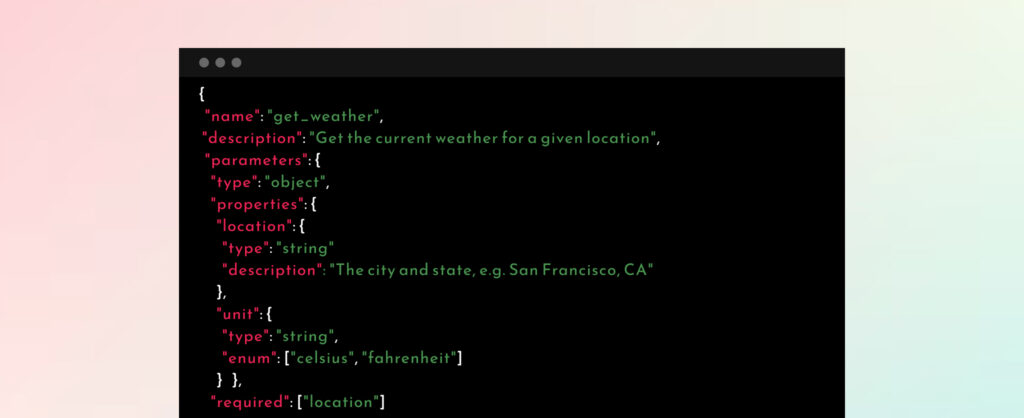

Step 1: Define the Function Schema

Use a JSON schema to describe each available function, including its name, description, and parameters, so the model knows what it can call and with which arguments.
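As a minimal sketch, here is how the get_weather function used in the following steps might be described in the `tools` format accepted by OpenAI’s Chat Completions API. The description text and the optional `unit` parameter are illustrative assumptions; check OpenAI’s function-calling documentation for the options your model supports.

```python
import json

# A tool definition in the Chat Completions "tools" format.
# The description and the optional "unit" parameter are illustrative.
get_weather_tool = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {  # standard JSON Schema describing the arguments
            "type": "object",
            "properties": {
                "city": {
                    "type": "string",
                    "description": "City name, e.g. Tokyo",
                },
                "unit": {
                    "type": "string",
                    "enum": ["celsius", "fahrenheit"],
                    "description": "Temperature unit (hypothetical optional parameter)",
                },
            },
            "required": ["city"],
        },
    },
}

# The list you pass as `tools=` when calling the model.
tools = [get_weather_tool]

if __name__ == "__main__":
    print(json.dumps(tools, indent=2))
```

With the official openai Python package, this list would be passed as `client.chat.completions.create(model=..., messages=..., tools=tools)`. Marking only `city` as required lets the model omit the unit when the user doesn’t mention one.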

Step 2: User Asks a Question (Prompt Submission)

“What’s the weather like in Tokyo right now?”

The model determines that it needs to call the get_weather function.

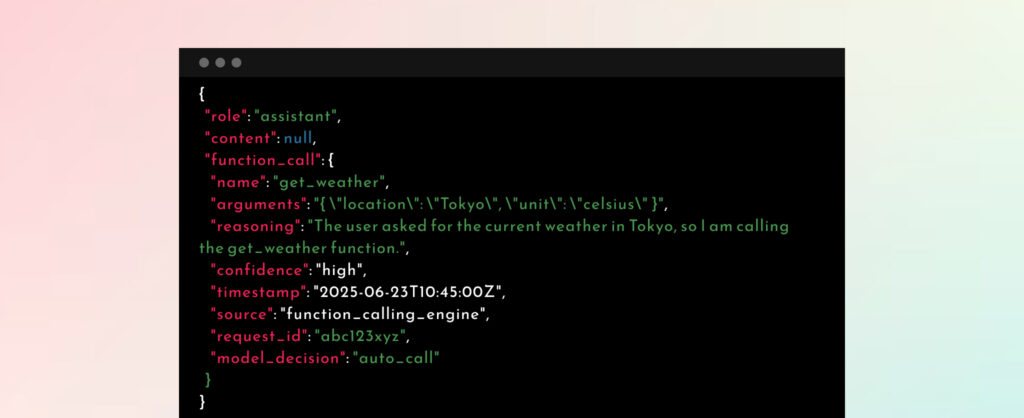

Step 3: GPT Model Returns a Structured Function Call

The model chooses to call the get_weather function and fills in its arguments as JSON: `{"city": "Tokyo"}`.
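To make Step 3 concrete, the sketch below uses a simulated assistant message shaped like the one the Chat Completions API returns when the model requests a tool call. The call id is made up, and the message is a plain dictionary so the example runs offline. Note that the arguments arrive as a JSON string that your code must parse.

```python
import json

# Simulated assistant message in the Chat Completions tool-call shape.
# The id value is made up for illustration.
assistant_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {
            "id": "call_abc123",
            "type": "function",
            "function": {
                "name": "get_weather",
                # Arguments arrive as a JSON *string*, not a dict.
                "arguments": '{"city": "Tokyo"}',
            },
        }
    ],
}


def extract_calls(message: dict) -> list[tuple[str, str, dict]]:
    """Return (call_id, function_name, parsed_arguments) for each tool call."""
    calls = []
    for call in message.get("tool_calls") or []:  # plain-text replies have none
        name = call["function"]["name"]
        args = json.loads(call["function"]["arguments"])  # raises on malformed JSON
        calls.append((call["id"], name, args))
    return calls


if __name__ == "__main__":
    for call_id, name, args in extract_calls(assistant_message):
        print(call_id, name, args)
```

With the openai Python package, the same fields are attributes on the response objects, for example `response.choices[0].message.tool_calls[0].function.arguments`.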

Step 4: Backend Executes the Function

You handle this step yourself, by calling an external API or running internal logic. For the functions you define, the model never executes the code itself.
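A common way to handle Step 4 is a dispatch table that maps the function names you exposed to real code. In this sketch, get_weather is a hypothetical stub that returns fixed data; in practice it would call your weather provider.

```python
from typing import Any, Callable


def get_weather(city: str, unit: str = "celsius") -> dict[str, Any]:
    """Hypothetical stand-in for a real weather API call."""
    return {"city": city, "temperature": 23, "unit": unit, "conditions": "sunny"}


# Map the function names exposed to the model onto real Python callables.
FUNCTIONS: dict[str, Callable[..., Any]] = {
    "get_weather": get_weather,
}


def execute_call(name: str, arguments: dict[str, Any]) -> Any:
    """Run the function the model asked for; unknown names are rejected."""
    if name not in FUNCTIONS:
        raise ValueError(f"Model requested unknown function: {name}")
    return FUNCTIONS[name](**arguments)


if __name__ == "__main__":
    print(execute_call("get_weather", {"city": "Tokyo"}))
```

Keeping the mapping explicit means the model can only reach functions you deliberately registered.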

Step 5: Results Sent to GPT

You send the function’s output back to the model in the next API call, and the model turns it into a natural, user-friendly reply:

“It’s currently 23°C and sunny in Tokyo.”
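Step 5 can be sketched as building the message list for the second request: the original conversation, the assistant turn that requested the call, and one `tool` message per call that carries the result and the matching `tool_call_id`. The conversation, the call id, and the weather values are illustrative.

```python
import json

# Conversation so far and the assistant turn that requested a tool call
# (values are illustrative; the id is made up).
history = [{"role": "user", "content": "What's the weather like in Tokyo right now?"}]
assistant_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [{
        "id": "call_abc123",
        "type": "function",
        "function": {"name": "get_weather", "arguments": '{"city": "Tokyo"}'},
    }],
}
# Output of your own get_weather implementation, keyed by tool call id.
results = {"call_abc123": {"city": "Tokyo", "temperature": 23, "conditions": "sunny"}}


def build_followup_messages(history, assistant_message, results):
    """Return the messages for the second request: history, the assistant's
    tool-call turn, then one `tool` message per requested call."""
    messages = list(history)
    messages.append(assistant_message)
    for call in assistant_message["tool_calls"]:
        messages.append({
            "role": "tool",
            "tool_call_id": call["id"],  # ties this result to the model's request
            "content": json.dumps(results[call["id"]]),
        })
    return messages


messages = build_followup_messages(history, assistant_message, results)
# With the openai package, the follow-up request would then look like:
# client.chat.completions.create(model="gpt-4o", messages=messages, tools=tools)
```

The model reads the `tool` message content and writes the final, user-facing sentence.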

With function calling, GPT becomes more than a chatbot: it becomes part of your backend logic. Our AI development agency helps you integrate GPT into your systems to automate workflows, trigger real-time actions, and deliver smarter, personalized experiences for your users.

LLM Function Calling vs RAG: What’s the Difference and When to Use Each?

Function calling and RAG (Retrieval-Augmented Generation) are both powerful tools — but they solve different problems.

Function Calling triggers real-world actions (e.g. sending an email, calling an API, updating a CRM).

RAG helps the model respond accurately by retrieving relevant documents or data before generating a reply.

| | Function Calling | RAG |
|---|---|---|
| What it does | Triggers real-world actions or fetches live data | Retrieves documents or facts to provide grounded answers |
| Why it’s used | To connect with internal systems, tools, or APIs | To improve response accuracy with up-to-date or private knowledge |
| Input format | JSON schema for available functions | Embedded documents for retrieval |
| Use cases | Book appointments, pull data, trigger APIs | Answer policy questions, summarize reports |

Choosing between (or combining) these approaches depends on your domain and requirements. For a full comparison of leading LLMs (including GPT-4o, Claude, LLaMA, etc.) for RAG chatbots across industries like legal, healthcare, finance, and more, check out our LLM comparison for RAG chatbots.

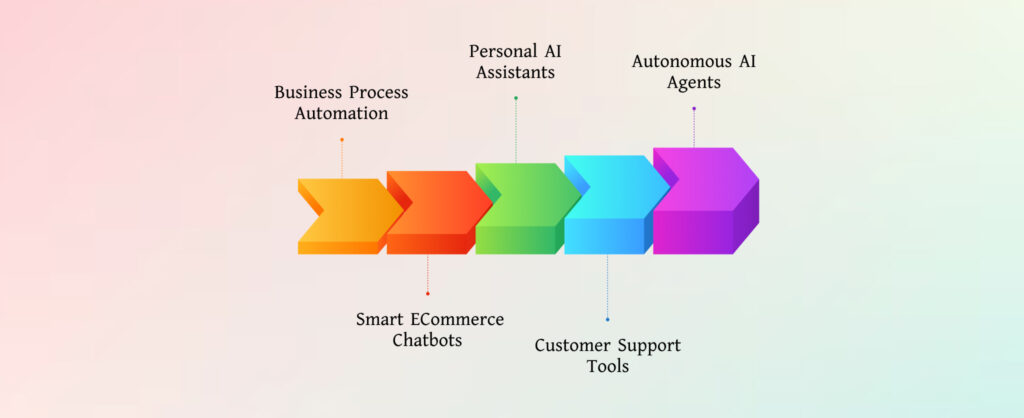

Real-World Use Cases for LLM + OpenAI Function Calling

LLM function calling is already powering business-critical applications. Here are some prominent examples:

Personal AI assistants

These assistants go beyond surface-level chat. They interact with calendars, email clients, CRMs, and task managers — all through natural language.

For instance, instead of asking “What’s on my calendar?”, users can say “Schedule a call with Sarah next Tuesday,” and the assistant creates the event through your calendar API.

Business Process Automation

Function calling turns GPT into a front-end for internal operations, automating routine tasks through voice or text commands.

For instance, departments like HR, finance, or operations can run internal processes via chat: “Submit leave request,” “Generate expense report,” or “Update payroll records.”

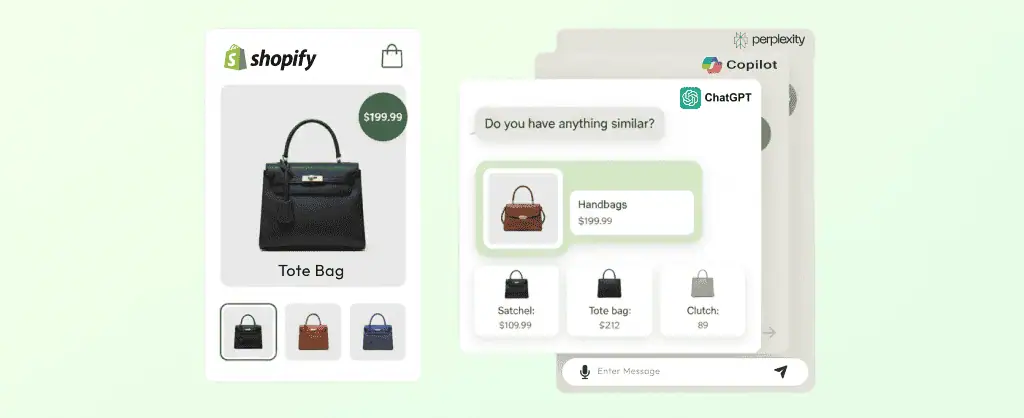

Smart eCommerce Chatbots

Function calling lets an OpenAI-powered chatbot pull real-time data from your internal database. For instance, customer queries like “Where’s my order?” or “Cancel item 3” can trigger backend calls to check order status, process cancellations, or issue returns.

Customer Support Tools

Customer service chatbots enhanced with function calling can escalate tickets, query databases, and push actions to support CRMs like Zendesk, Salesforce, or Freshdesk. For scaling these agents in enterprise environments, LangChain v1 introduces durable workflows, middleware hooks, and observability, which make customer support agents more reliable and production-ready.

Autonomous AI Agents

This is where things evolve: by combining LLM function calling, RAG, and memory, AI agents can complete multi-step tasks without human intervention.

These agents can:

- Use tools (via function calling)

- Retrieve context (via RAG)

- Store session history (via memory)

- Make stepwise decisions (via planners or agents)

Ready to build one yourself? Follow this step-by-step 2025 guide to creating a production-ready OpenAI RAG chatbot — it covers everything from setting up embeddings and vector databases to integrating real-time knowledge retrieval and conversation memory for reliable, context-aware responses.

Limitations of LLM Function Calling (and How to Avoid Them)

Function calling with large language models (LLMs) is powerful. But without guardrails, it can introduce serious risks. Here’s what to watch out for — and how to stay ahead of the pitfalls.

1. Costs Can Climb Quickly

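A single function-calling exchange usually takes at least two model requests (one that returns the function call and one that turns the result into a reply), plus the external API calls in between. Each layer adds usage costs, especially in nested workflows.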

How to address it:

- Cache repeatable responses where possible

- Use plain code instead of a model call wherever GPT is not essential

- Track token and API usage per function
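The caching and per-function tracking advice can be sketched as a small decorator. This is a minimal in-memory sketch: the get_weather stub, the 10-minute time-to-live, and the usage dictionary are assumptions, and a production system would more likely use a shared cache and a proper metrics backend.

```python
import functools
import time
from collections import defaultdict

# Per-function usage counters (in memory; a real system would export these).
usage = defaultdict(lambda: {"calls": 0, "cache_hits": 0, "seconds": 0.0})


def tracked_cache(ttl_seconds: float = 300.0):
    """Decorator: cache results per argument set for ttl_seconds and record
    real calls, cache hits, and time spent for each function."""

    def decorator(func):
        cache = {}  # maps argument key -> (timestamp, result)

        @functools.wraps(func)
        def wrapper(**kwargs):
            key = tuple(sorted(kwargs.items()))  # assumes hashable argument values
            stats = usage[func.__name__]
            now = time.monotonic()
            cached = cache.get(key)
            if cached is not None and now - cached[0] < ttl_seconds:
                stats["cache_hits"] += 1
                return cached[1]
            start = time.perf_counter()
            result = func(**kwargs)
            stats["seconds"] += time.perf_counter() - start
            stats["calls"] += 1
            cache[key] = (now, result)
            return result

        return wrapper

    return decorator


@tracked_cache(ttl_seconds=600)
def get_weather(city: str) -> dict:
    """Hypothetical stand-in for a paid weather API."""
    return {"city": city, "temperature": 23}
```

Because tool arguments arrive as a dictionary, the wrapped function is called as `get_weather(**args)`. Token counts reported on each model response (`response.usage.total_tokens` in the Chat Completions API) could be added to the same `usage` dictionary.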

2. Latency Adds Up

Each handoff — GPT prompt, response parsing, API call, response return — adds time. A multi-step function call can easily introduce noticeable delays for users.

How to address it:

- Keep functions modular to isolate and diagnose slow steps

- Use async processing for non-critical actions

- Show progress indicators in the UI
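When the model returns several independent tool calls in one turn (newer OpenAI models can request calls in parallel), running them concurrently means users wait roughly as long as the slowest call rather than the sum of all of them. A minimal asyncio sketch, with hypothetical async stubs whose `sleep` calls stand in for network time:

```python
import asyncio
import time


async def get_weather(city: str) -> dict:
    """Hypothetical async weather lookup; the sleep simulates network latency."""
    await asyncio.sleep(0.2)
    return {"city": city, "temperature": 23}


async def get_calendar(day: str) -> dict:
    """Hypothetical async calendar lookup."""
    await asyncio.sleep(0.2)
    return {"day": day, "events": []}


ASYNC_FUNCTIONS = {"get_weather": get_weather, "get_calendar": get_calendar}


async def run_calls_concurrently(calls: list[tuple[str, dict]]) -> list[dict]:
    """Start all independent tool calls at once; results keep the input order."""
    coroutines = [ASYNC_FUNCTIONS[name](**args) for name, args in calls]
    return list(await asyncio.gather(*coroutines))


if __name__ == "__main__":
    start = time.perf_counter()
    results = asyncio.run(run_calls_concurrently([
        ("get_weather", {"city": "Tokyo"}),
        ("get_calendar", {"day": "Tuesday"}),
    ]))
    elapsed = time.perf_counter() - start
    print(results)
    # The two simulated waits overlap, so elapsed is about one call's duration.
    print(f"elapsed: {elapsed:.2f}s")
```

Only calls that don’t depend on each other’s results should be gathered this way; dependent steps still have to run in sequence.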

3. Trust & Safety Risks

A malicious or poorly worded prompt could trigger something critical or expose a security gap. Blindly executing whatever function call the model returns can lead to disaster.

How to address it:

- Apply role-based restrictions on which functions each user can trigger

- Always validate inputs before passing them to any function

- Allowlist permitted function names and parameter values
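These three safeguards can be combined into one check that runs before any model-proposed call is executed. This is a minimal sketch: the roles, the order functions (get_order_status, cancel_order_item), and the validation rules are hypothetical, loosely based on the “Cancel item 3” eCommerce example. Schema validation can cover the type checks, but permissions still have to be enforced in your own code.

```python
from typing import Any

# Which functions each role may trigger (hypothetical roles and names).
ROLE_PERMISSIONS = {
    "viewer": {"get_order_status"},
    "agent": {"get_order_status", "cancel_order_item"},
}

# Per-function argument rules: (expected type, extra validity check).
ARG_RULES = {
    "get_order_status": {
        "order_id": (str, lambda v: v.isalnum() and len(v) <= 20),
    },
    "cancel_order_item": {
        "order_id": (str, lambda v: v.isalnum() and len(v) <= 20),
        "item_index": (int, lambda v: 1 <= v <= 50),
    },
}


class RejectedCall(Exception):
    """Raised when a model-proposed call fails a safety check."""


def check_call(role: str, name: str, args: dict[str, Any]) -> None:
    """Raise RejectedCall unless this role may run this call with these arguments."""
    if name not in ROLE_PERMISSIONS.get(role, set()):  # allowlist + role check
        raise RejectedCall(f"role {role!r} may not call {name!r}")
    rules = ARG_RULES[name]
    unexpected = set(args) - set(rules)
    if unexpected:
        raise RejectedCall(f"unexpected arguments: {sorted(unexpected)}")
    for arg_name, (expected_type, is_valid) in rules.items():
        if arg_name not in args:
            raise RejectedCall(f"missing argument: {arg_name}")
        value = args[arg_name]
        # Exact type match, so True/False are not accepted as integers.
        if type(value) is not expected_type or not is_valid(value):
            raise RejectedCall(f"invalid value for {arg_name}: {value!r}")
```

A call that passes `check_call` can be handed to your dispatcher; one that raises should never reach the backend.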

4. API Dependency & Fragility

External APIs directly affect your function chain, especially when they respond slowly or behave unpredictably. Worse, GPT might not know what went wrong or how to recover.

How to address it:

- Monitor function-call failure rates in production

- Add fallback responses and error handling

- Retry failed API calls with exponential backoff
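Retries with exponential backoff and a fallback might look like the sketch below. TransientAPIError is a hypothetical exception type standing in for whatever timeout or 5xx errors your HTTP client raises, and the delay values are assumptions to tune. Returning a structured fallback instead of raising means the result can still be sent back to the model, so it can tell the user what went wrong.

```python
import random
import time


class TransientAPIError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, 5xx)."""


def call_with_retry(func, args: dict, *, max_attempts: int = 4,
                    base_delay: float = 0.5, max_delay: float = 8.0,
                    fallback=None):
    """Call func(**args), retrying transient failures with exponential backoff.

    The delay cap doubles each attempt (base_delay * 2**attempt, limited to
    max_delay) and the actual sleep is a random value below it ("full
    jitter"), so many clients don't retry in lockstep. If every attempt
    fails, return `fallback` instead of raising.
    """
    for attempt in range(max_attempts):
        try:
            return func(**args)
        except TransientAPIError:
            if attempt == max_attempts - 1:
                break  # out of attempts: use the fallback
            delay = min(max_delay, base_delay * 2 ** attempt)
            time.sleep(random.uniform(0, delay))
    return fallback
```

A fallback such as `{"error": "weather service unavailable"}` can be returned to the model as the tool result, giving it enough context to explain the failure instead of guessing.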

Function calling is a leap forward in how LLMs interact with business systems. Roll it out step by step, with these guardrails in place, to unlock the productivity gains.

The Future: Function Calling + Agentic LLMs Working Together

Function calling is the first step toward fully autonomous LLM-based agents.

Instead of one-shot tasks, agents use GPT function calling in series to achieve a goal. They:

- Break down a single task (user goal) into multiple jobs

- Choose which function to use for each job

- Create output (response) after processing all function calls

Example: “Book me a meeting with Alex next week and send a summary of our last call.”

To handle it, the AI agent might carry out several jobs:

- Query your calendar

- Retrieve past meeting notes (via RAG)

- Draft and send an email

- Confirm with a summary

This agent loop continues until the job is done.

Here’s how it typically works:

| Stage | Example |
|---|---|
| The user sets a goal rather than just asking a question | “Book me a meeting with Alex next week and send a summary of our last conversation.” |
| The LLM reasons through the goal and breaks it into sub-tasks | 1. Retrieve calendar availability; 2. Find the last meeting notes; 3. Compose an email; 4. Schedule the event |
| The agent calls functions dynamically | 1. Query a calendar API; 2. Search meeting transcripts (RAG); 3. Call a scheduling function; 4. Feed the results back to the model |
| The loop continues until the goal is achieved | The LLM may make multiple function calls before crafting the final output. |
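The loop described in the table can be sketched in a few lines. To keep the example runnable offline, scripted_model is a fake that replays a fixed plan using the Chat Completions tool-call message shape; with a real model you would replace it with a call to `client.chat.completions.create(...)` and adapt the handling to the SDK’s response objects. All tool names and return values are hypothetical.

```python
import json
from typing import Any, Callable


# Hypothetical tools; real ones would call a calendar API, a RAG search over
# meeting transcripts, and an email service.
def schedule_meeting(person: str) -> dict:
    return {"person": person, "slot": "Tuesday 10:00", "status": "booked"}


def search_notes(query: str) -> dict:
    return {"summary": "Placeholder summary of the last call."}


def send_email(to: str, body: str) -> dict:
    return {"status": "sent", "to": to}


TOOLS: dict[str, Callable[..., Any]] = {
    "schedule_meeting": schedule_meeting,
    "search_notes": search_notes,
    "send_email": send_email,
}


def run_agent(model: Callable[[list], dict], goal: str, max_steps: int = 5) -> str:
    """Ask the model, run any tool calls it returns, feed the results back,
    and repeat until it replies in plain text or max_steps is reached."""
    messages: list[dict] = [{"role": "user", "content": goal}]
    for _ in range(max_steps):
        reply = model(messages)
        messages.append(reply)
        if not reply.get("tool_calls"):
            return reply["content"]  # no tools requested: this is the final answer
        for call in reply["tool_calls"]:
            # In production, validate the name and arguments before executing.
            args = json.loads(call["function"]["arguments"])
            result = TOOLS[call["function"]["name"]](**args)
            messages.append({"role": "tool", "tool_call_id": call["id"],
                             "content": json.dumps(result)})
    return "Stopped: step limit reached before the goal was completed."


def scripted_model(messages: list) -> dict:
    """Fake model that replays a fixed plan, standing in for a real LLM call."""
    plan = [
        [("call_1", "schedule_meeting", {"person": "Alex"}),
         ("call_2", "search_notes", {"query": "last call with Alex"})],
        [("call_3", "send_email", {"to": "alex@example.com",
                                   "body": "Summary: placeholder."})],
    ]
    turn = sum(1 for m in messages if m["role"] == "assistant")
    if turn < len(plan):
        return {"role": "assistant", "content": None, "tool_calls": [
            {"id": cid, "type": "function",
             "function": {"name": name, "arguments": json.dumps(args)}}
            for cid, name, args in plan[turn]
        ]}
    return {"role": "assistant",
            "content": "Booked Tuesday 10:00 with Alex and emailed a summary of your last call."}


if __name__ == "__main__":
    print(run_agent(scripted_model, "Book me a meeting with Alex next week "
                    "and send a summary of our last call."))
```

The `max_steps` budget is a deliberate design choice: it stops a confused model from looping forever and running up cost.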

Together, function calling and agentic behavior transform how we think about AI systems. Instead of single-turn chatbots, you can get intelligent agents that can reason, decide, and take meaningful actions based on user goals.

These agentic capabilities are already evolving rapidly in newer models — for the latest on ChatGPT 5’s deeper reasoning, unified routing, enhanced tool connectors, and remaining limitations, check out this detailed review of ChatGPT 5 pros and cons.

Frequently Asked Questions

Does LLM function calling require structured data?

Yes. Function calling depends on well-structured data formats such as JSON: each function the LLM can call is described with a JSON schema, and the model returns its arguments as JSON.

Looking to implement advanced chatbot solutions? Our custom AI chatbot development company can help you build AI-powered chatbots capable of handling complex data, automating workflows, and enhancing customer experiences.

Can function calling be used with private or internal systems?

Yes. Function calling lets AI models interact with your own systems and data, enabling more complex, automated tasks. The model only generates the call request; all execution happens in your own environment, under your own security controls.

Which OpenAI models support function calling?

Function calling was introduced with gpt-4-0613 and gpt-3.5-turbo-0613 and is supported by later models such as gpt-4-1106-preview and GPT-4o. Verify model compatibility in OpenAI’s documentation before implementing.

Need expert assistance? Hire AI developers to implement OpenAI function calling securely and build custom AI solutions tailored to your business needs.

Can function calling be combined with RAG and memory?

Absolutely. In fact, the most powerful applications combine:

1. RAG for grounding the model in accurate knowledge

2. Function calling for triggering real actions

3. Memory for context persistence across sessions

This combination creates robust, agent-like systems that are useful and intelligent.

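What happens if the model’s function call doesn’t match a defined function or schema?

The model only proposes function calls; your code decides whether to run them. If a prompt doesn’t call for any defined function, the model usually replies with ordinary text instead. If it does return a call whose name or arguments don’t match your schema (possible unless you enable strict schema adherence with "strict": true on the function definition), nothing runs automatically: validate the call, reject it if it is invalid, and optionally send the error back to the model so it can correct itself or explain the problem to the user.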
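One way to handle these cases is to turn every problem with a proposed call into an error result that goes back to the model as a tool message, instead of crashing or silently dropping it. This sketch assumes the Chat Completions message shape; KNOWN_FUNCTIONS and its get_weather stub are hypothetical.

```python
import json

# Hypothetical registry with a stub weather function.
KNOWN_FUNCTIONS = {"get_weather": lambda city: {"city": city, "temperature": 23}}


def handle_reply(message: dict) -> list[dict]:
    """Turn one assistant message into the tool messages to send back.

    Returns an empty list when the model answered in plain text.
    """
    tool_messages = []
    for call in message.get("tool_calls") or []:
        name = call["function"]["name"]
        try:
            if name not in KNOWN_FUNCTIONS:
                raise ValueError(f"unknown function {name!r}")
            args = json.loads(call["function"]["arguments"])
            result = KNOWN_FUNCTIONS[name](**args)
        except (ValueError, TypeError) as exc:
            # json.JSONDecodeError is a subclass of ValueError; TypeError means
            # the arguments didn't fit the function's parameters.
            result = {"error": str(exc)}
        tool_messages.append({"role": "tool", "tool_call_id": call["id"],
                              "content": json.dumps(result)})
    return tool_messages
```

A plain-text reply yields no tool messages and can be shown to the user directly, while an error result lets the model retry with corrected arguments.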

Need Help Implementing LLM Function Calling with OpenAI?

Function calling changes the role of language models from text generators to interactive interfaces. We help businesses design, integrate, and scale AI solutions using OpenAI tools.

Want to identify the right model for your industry? Our LLM Comparison Guide highlights domain‑specific choices that maximize ROI and engagement.

Whether you’re building internal agents, smart chatbots, or automated workflows — we provide top-tier AI-powered solutions using OpenAI and other AI models.

At The Brihaspati Infotech, we offer:

- LLM architecture and API planning

- Function schema design and integration

- Secure, production-grade implementation

Ready to implement function calling? Schedule an AI consultation and get started.
